Configuring TitanCNA (manually)

TitanCNA ships with various workflows that seem rather easy to configure and run. But as a newbie in bioinformatics, I consider it to be a valuable exercise to configure and get tools working from ground up. This post assumes the following starting point:

- BAM files for Normal and Tumor samples that have been through the standard pre-processing pipeline, i.e., mapping, sorting, marking duplicate, adding read groups, and indel realignment.

- Germline SNP calling using

HaplotypeCaller. - Softwares:

- alleleCount.

- HMMcopy_utils, and

- HMMcopy,

- TitanCNA,

Analysis workflow

- Identify germline SNP positions \(L = \{ t_i\}_{i=1}^{T}\), where \(T\) denotes the total number of germline SNP positions. We want to extract loci.

- Input: BAM file for normal sample.

- Output:

- Loci file, which is a tab separated file with two columns,

chr,posn. The file is not to contain any header. - TSV file with four columns,

chr,posn,ref,nonRef.

- Loci file, which is a tab separated file with two columns,

- Get allelic counts from normal and tumor samples. We will use

alleleCountprogram, which accepts tab separated filechrandposn(without column header).- Input: Output of Step 1.

- Output: Data file containing six columns,

chr,posn,ref,refCount,nonRef,nonRefCount. Output name:Normal/allele_counts.txt,Tumor/allele_counts.txt.

- Correction for GC content and mappability biases. The raw counts and depths may be biased due to GC content. This is where

HMMcopy_utilwill be useful.- Input: BAM file for Normal and Tumor samples

- Output: WIG file for read counts computed by binning Normal and Tumor samples at 1000kb. Output names:

Normal/readcounts.seg,Tumor/readcounts.seg.

- Perform CNA.

- Input:

Tumor/allele_counts.txt,Normal/readcounts.seg,Tumor/readcounts.seg. - Output: Data file containing copy number profile intervals.

- Input:

- Repeat CNA for a range of clonal clusters.

Step 1: Identify germline SNP positions from normal sample

- Input: BAM file for normal sample.

- Output: To produce file with two columns,

chr,posnand another file with four columnschr,posn,ref,alt.

We essentially need to write a simple Python script to process the output of HaplotypeCaller. Essentially, reading each line and retrieving the chr and posn of germline SNP. Python code for this procedure is avilable on my Github.

Notes

- Q: are the germline SNP loci specific to the sample or should we use a set of known positions?

- A1: TitanCNA vignette reads: “The data points of interest are the germline heterozygous SNP loci identified in the matched normal WGS sample. This is a pre-processing step that is completed by the user, and is outside of the scope of this R package.”

- A2: One can use a known loci if desired. But in the dataset that I am interested in analyzing, what I have is exome data. Therefore, some of these known loci may not have any read (or only a few), which would definitely lead to misleading copy number inference.

Step 2: Extract read depth from tumor and normal samples

- Input: loci file from Step 1.

- Output: A file containing six columns:

chr, posn, ref, refOriginal, nonRef, tumDepth, for normal and tumor sample.

We will use alleleCounter to obtain the count at each of the locus. This is just a one line script (assuming alleleCounter is installed),

alleleCounter -l path/to/loci_file_from_step1 -r path/to/hg/ -b $1/indel_realigned.bam -o $1/sample.allelecount

where $1 indicates the directory containing the BAM file and where the output is to be stored.

The output of alleleCounter needs to be further processed to form the desired output containing 6 columns. To do so, we will use the second output from Step 1 (i.e., the larger file containing 4 columns). I have written a simple script that does this. It requires helper_functions.py, which is available here.

import argparse

import os

import pandas as pd

import helper_functions as helpers

parser = argparse.ArgumentParser(description='Generate input file for TitanCNA.')

parser.add_argument('study_dir', help="Name of the directory containing the data files for a study. E.g., TNBC", type=str, default="TNBC")

parser.add_argument('patient_id', help="Patient ID. E.g., KTN126", type=str)

parser.add_argument('--data_dir', help="Data directory.", type=str, default="/proj/uppstore2018155/")

parser.add_argument('--sample_name', help="Name of the directory containing the sample to be processed. E.g., KTN1260.", type=str, default="")

parser.add_argument('--germline_snv_file', help="Name of the germline SNV file.", type=str, default="germline_snvs.tsv")

args = parser.parse_args()

patient_dir = os.path.join(args.data_dir, args.study_dir, args.patient_id)

common_dir = os.path.join(patient_dir, "common")

file_handle = open(os.path.join(common_dir, args.germline_snv_file), 'r')

next(file_handle)

loci_dict = dict()

for line in file_handle:

row = line.split('\t')

key = str(row[0]) + "_" + str(row[1])

loci_dict[key] = (row[2].strip(), row[3].strip())

if args.sample_name == "":

samples = os.listdir(patient_dir)

else:

samples = [args.sample_name]

for sample in samples:

if sample.startswith(".") or sample == "common":

continue

sample_dir = os.path.join(patient_dir, sample)

count_file = os.path.join(sample_dir, "sample.allelecount")

file_handle = open(count_file, 'r')

dat = list()

next(file_handle)

for line in file_handle:

row = line.split('\t')

key = str(row[0]) + "_" + str(row[1])

if key in loci_dict:

ref, alt = loci_dict[key]

if len(ref.strip()) > 1 or len(alt.strip()) > 1:

continue

ref_count = int(row[2 + helpers.get_idx(ref)])

alt_count = int(row[2 + helpers.get_idx(alt)])

dat.append((str(row[0]), int(row[1]), ref, ref_count, alt, ref_count + alt_count))

df = pd.DataFrame(data=dat, columns=['chr', 'posn', 'ref', 'refOriginal', 'nonRef', 'tumDepth'])

# save to file

dest_file_name = os.path.join(sample_dir, "allele_counts.txt")

df.to_csv(dest_file_name, sep="\t", index=False)

Notes

- Out of curiosity, I wanted to compare the output of

HaplotypeCallerto the counts retrieved by pysam andalleleCount.- Note that

HaplotypeCallerperforms indel realignment internally. To ensure there is as little discrepancy as possible, we should use the BAM file with indel realignment. - I chose

pysambecause it seemed easy to set up and use and I have been told that it is pretty fast.

- Note that

To retrieve the reads at a specific loci, one can use following code:

import pysam

bamfile = pysam.AlignmentFile("path/to/bam")

# Example: to get the reads at 1:949597

chromosome = '1'

start = 949597

ref = 'C'

alt = 'T'

bamfile.count('1', start-1, start)

ref_count = 0

alt_count = 0

other_count = 0

for read in bamfile.fetch('1', start-1, start):

seq = read.get_forward_sequence()

quals = read.get_forward_qualities()

pos = start - 1 - read.reference_start

if quals[pos] > 40:

if seq[pos] == ref:

ref_count = ref_count + 1

#print("%s, %i" % (seq[pos], quals[pos]))

elif seq[pos] == alt:

alt_count = alt_count + 1

#print("%s, %i" % (seq[pos], quals[pos]))

else:

other_count = other_count + 1

print("%i, %i, %i" % (ref_count, alt_count, other_count))

# Output:

# 33, 15, 10

Running the above code produced 33, 15, 10 as the output for the reference, alternate, and other bases. On the contrary, VCF file produced by HaplotypeCaller for chromosome 1 had 16 and 13 as counts for reference and alternate alleles. The discrepancy in the counts is too large to be ignored, prompting further investigation.

- In short, it can be due to the duplicates, which are marked but may not have been removed by GATK/Picard tool.

HaplotypeCallerknows to ignore them, butpysammay not. - Not satisfied, I used

alleleCounterto compute the counts at loci identified byHaplotypeCaller. It gave counts that were very close to HaplotypeCaller. For example, at position 949597, I got 16 and 12 as counts for reference and alternate allele compared to 16 and 13 from HaplotypeCaller. In general, the counts were more or less similar at different locations as well. - Conclusion: I decided to go with

alleleCounter.- Disclaimer: I’m not advocating for or against any tool. I’m sure there must be a way to configure

pysamto behave in the desired way. It’s just that I didn’t investigate and I was satisfied withalleleCounter.

- Disclaimer: I’m not advocating for or against any tool. I’m sure there must be a way to configure

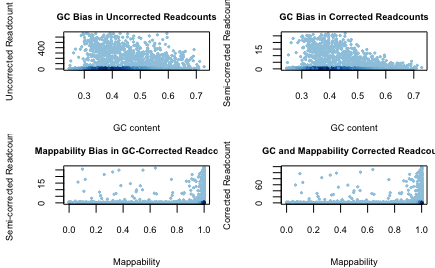

Step 3: Correction for GC content and mappability biases

HMMcopy is a package developed for correcting for GC content and mappability biases. As per TitanCNA vignette, TitanCNA seems to work extensively with WIG file formats:

“For TITAN, the user will need to generate the read depth files for the tumour and normal samples in the form of WIG files.”

We will use HMMcopy_util to obtain the read depth files for tumour and normal samples in WIG format.

Step 3-1: HMMcopy_util

Clone HMMcopy_util via command git clone https://github.com/shahcompbio/hmmcopy_utils. Follow instructions to compile the program. This requires cmake, which you can get using brew on OSX and make, which should be available as long as you have installed Xcode. There are 3 programs in HMMcopy_utils:

bin/readCounterproduces WIG file from your (tumor and normal) BAM file. Use the window size of 1,000 (this is the recommended option). I added thehmmcopy_utils/bin/toPATHin.bashrc. With that, I can issue the following command,readCounter -w 1000 path/to/normal/indel_realigned.bam > path/to/normal/readcounts.seg readCounter -w 1000 path/to/tumor/indel_realigned.bam > path/to/tumor/readcounts.segbin/gcCounterproduces WIG file from reference human genomefastafile. The HG file should be the same one that we have been using in the pre-processing steps.bin/mapCounterproduces WIG file. It accepts BigWig file format for the reference hg file.

Notes

HMMcopy_utilseems to ship GC and Mappability WIG files generated with window size of 1,000. Therefore,bin/gcCounterandbin/mapCounterneed not be run unless one wishes to use different window size.- Question: how does one obtain BigWig file from HG18/19?

- Some hint here?

Step 3-2: HMMcopy

This step can be skipped since TitanCNA has a wrapper function that calls the functions in HMMcopy but it is nice to try out the package to see exactly what it does and to catch any problems that may have occurred up to this point in the processing steps. They have a nice vignette that is easy to follow, which I paste below:

library(HMMcopy)

readfile <- "path/to/output/of/readCounter"

gcfile <- "path/to/hmmcopy_utils/data/gc_hg19.wig"

mapfile <- "path/to/hmmcopy_utils/data/map_hg19.wig"

uncorrected_reads <- wigsToRangedData(readfile, gcfile, mapfile)

corrected_copy <- correctReadcount(uncorrected_reads)

segmented_copy <- HMMsegment(corrected_copy)

par(cex.main = 0.7, cex.lab = 0.7, cex.axis = 0.7, mar = c(4, 4, 2, 0.5))

plotBias(corrected_copy, pch = 20, cex = 0.5)

par(mar = c(4, 4, 2, 0))

plotCorrection(corrected_copy, pch = ".")

par(cex.main = 0.7, cex.lab = 0.7, cex.axis = 0.7, mar = c(4, 4, 2, 0.5))

plotSegments(corrected_copy, segmented_copy)

Notes

- For TNBC data (exome data), I was not able to generate a nice figure as is given in the

HMMcopyvignette. This is because there are many regions with little or no reads and the scale for the figure is exorted as a result.

Step 4: TitanCNA

** Input: Normal/allele_counts.txt and Tumor/allele_counts.txt from Step 2.

** Output: Data file of copy number profile.

The steps for using TitanCNA are described in Section 5 of the vignette [2]. I have adapted this code for analysis of TNBC data. The complete code is available here. Here, I will briefly describe the steps that I have taken to prepare the input data. I chose not to perform GC correction for the time being.

library(TitanCNA)

library(HMMcopy)

min_depth <- 10

max_depth <- 500

infile <- "path/to/Normal/allele_counts.txt"

normal_data <- read.csv(infile, header=T, sep="\t")

infile <- "path/to/Tumor/allele_counts.txt"

tumor_data <- read.csv(infile, header=T, sep="\t")

head(normal_data)

head(tumor_data)

# combine the two data sources

data <- data.frame(chr=normal_data$chr, posn=normal_data$posn, normalDepth=normal_data$refCount + normal_data$nonRefCount, tumorDepth=tumor_data$refCount + tumor_data$nonRefCount)

dim(data)

# filter by min_depth

data <- subset(data, normalDepth >= min_depth & tumorDepth >= min_depth)

dim(data)

# compute the logR (without GC correction)

data$logR <- log(data$tumorDepth/data$normalDepth, 2)

# histogram of logR should resemble a Normal distribution

hist(data$logR, breaks = 30)

plot(1:length(data$logR), data$logR, type='l')

# now, use Titan function loadAlleleCounts to form the data that is accepted by Titan functions

titan_data <- loadAlleleCounts(tumor_data)

dim(titan_data)

# we want to trim this dataset to be the same length as data declared above

titan_data<-subset(titan_data, chr %in% data$chr & posn %in% data$posn)

dim(titan_data)

dim(data)

# now set the logR value for titan_data

titan_data$logR <- data$logR

# run the filterData function, which essentially turns X, Y into 23, 24

titan_data <- filterData(titan_data, 1:24, minDepth = min_depth, maxDepth = max_depth, map = NULL)

The inline comments describe the code in sufficient detail. But basically, I’m computing \(log_2(N^T/N^N)\), where \(N^T, N^N\) denote depth for tumour and normal samples respectively.

Notes

- I noticed that the tutorial code in the vignette is not using BAF data. I may have missed something but looking over the package documentation, it doesn’t seem like TitanCNA uses BAF data anywhere.

References

[1] Ha G, Roth A, Khattra J, Ho J, Yap D, Prentice LM, Melnyk N, McPherson A, Bashashati A, Laks E, Biele J. TITAN: inference of copy number architectures in clonal cell populations from tumor whole-genome sequence data. Genome research. 2014 Jul 24:gr-180281.

[2] TitanCNA Vignette.